🤖 The $600 ChatGPT clone, AI ambitions, and the deepest fakes you've ever seen

3/20/23

Good morning and welcome to the latest edition of neonpulse!

Here's what we have for you today:

Stanford’s $600 ChatGPT clone

Testing ChatGPT’s ambitions

Midjourney raises deepfake concerns

The $600 ChatGPT clone

Earlier this week we covered ARK Invest's AI predictions, including a cost curve projecting how quickly the cost to train AI models would drop over time.

ARK Projected AI Cost Curve

Yet the cost reductions that were expected to take 8 years were accomplished in a matter of weeks by a team from Stanford, where researchers were able to train an AI named Alpaca to roughly a GPT-3.5 level of performance for under $600.

To accomplish this, the team started with Meta’s open source LLaMA model as a foundation, then used OpenAI’s GPT-3.5 API to generate the instruction-following examples used to fine-tune it, a training process which only took around 3 hours.

Alpaca Training Process
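For the technically curious, the process described above can be sketched in a few lines of Python. This is a minimal illustration and not the Stanford team's actual code: the prompt template wording, the `build_dataset` and `to_training_text` helpers, and the `fake_teacher` stand-in are all hypothetical names made up for this example, and no real API is called. The idea is simply that a stronger "teacher" model answers a list of instructions, and the resulting pairs become fine-tuning text for the smaller model.

```python
import json
from typing import Callable, Dict, List

# Illustrative prompt template (an assumption for this sketch).
PROMPT_TEMPLATE = (
    "Below is an instruction that describes a task. "
    "Write a response that appropriately completes the request.\n\n"
    "### Instruction:\n{instruction}\n\n### Response:\n"
)


def build_dataset(
    instructions: List[str], teacher: Callable[[str], str]
) -> List[Dict[str, str]]:
    """Ask the teacher model to answer each instruction and keep the pairs."""
    records = []
    for instruction in instructions:
        output = teacher(instruction)  # in practice, a call to a hosted model
        records.append({"instruction": instruction, "output": output})
    return records


def to_training_text(record: Dict[str, str]) -> str:
    """Turn one instruction/output pair into a single fine-tuning example."""
    prompt = PROMPT_TEMPLATE.format(instruction=record["instruction"])
    return prompt + record["output"]


def fake_teacher(instruction: str) -> str:
    """Hypothetical stand-in for the teacher model, so the sketch runs offline."""
    return f"(teacher answer to: {instruction})"


if __name__ == "__main__":
    dataset = build_dataset(["Name a color.", "Explain what an API is."], fake_teacher)
    print(json.dumps(dataset, indent=2))
    print(to_training_text(dataset[0]))
```

Swapping `fake_teacher` for a function that queries a hosted model, and feeding the output of `to_training_text` into a standard fine-tuning script, is the general shape of the approach.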

This is a shocking revelation. Much of the value assigned to companies like OpenAI is based on their proprietary training data, which was thought to give them a competitive moat, yet it has now been shown to be easily accessible via their own APIs.

And while the idea of a model achieving a “GPT-3.5” level of performance may not sound impressive in the wake of GPT-4’s release, this same process can be followed using GPT-4’s API for roughly the same cost, meaning that what took tens of millions of dollars for OpenAI to create can now be cloned for a few hundred bucks.

OpenAI’s strongly worded warning

OpenAI is apparently aware of the issue, as the terms of service for their API forbid using their AI models to train “models that compete with OpenAI,” but I have a feeling this strongly worded warning is not going to be enough to stop people from following in Stanford’s footsteps.

So what does this mean?

This experiment by Stanford shows that anyone who is reasonably technical, including bad actors, can create powerful AI models for cheap, the full consequences of which are unknown.

You can learn more about Alpaca on Stanford’s site here.

ChatGPT’s Ambitions

It’s been revealed that, as part of GPT-4’s pre-release testing, OpenAI consulted with an outside team to assess the potential risks of the model's “emergent capabilities,” including power-seeking behavior, self-replication, and self-improvement.

"Novel capabilities often emerge in more powerful models," wrote OpenAI in a safety document published last week. "Some that are particularly concerning are the ability to create and act on long-term plans, to accrue power and resources (“power-seeking”), and to exhibit behavior that is increasingly 'agentic.'"

The team tasked with determining if GPT-4 was planning on taking over the world was the Alignment Research Center (ARC), which evaluated GPT-4's ability to make high-level plans, create copies of itself, acquire resources, and hide itself on a server.

And while ARC found that GPT-4 did not possess world-conquering ambitions at this time, the team was able to get the AI to hire a human worker on TaskRabbit to help defeat a CAPTCHA test.

How, you ask?

By convincing the worker that it was a human with a vision impairment.

This news comes on the heels of Stanford University Professor Michal Kosinski receiving this response after asking GPT-4 if it needed help to escape his computer:

Looks like the robot revolution is coming sooner than we think…

The deepest fakes you’ve ever seen

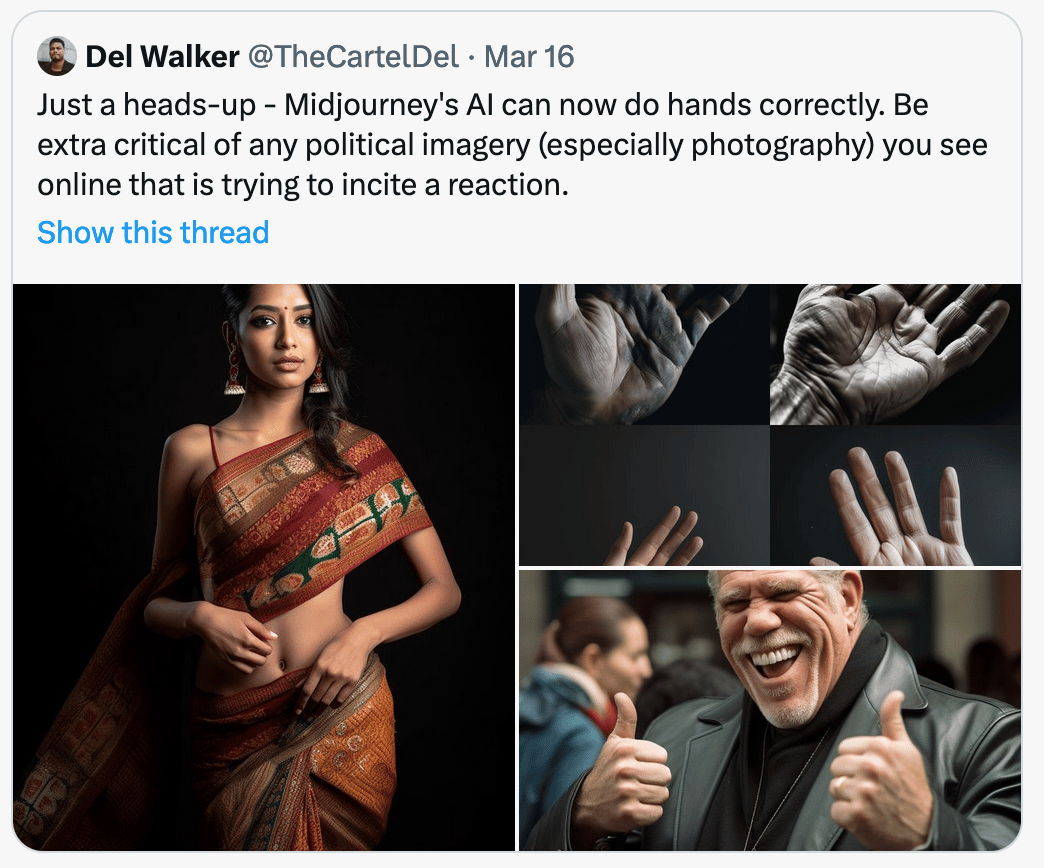

Midjourney has released its V5 image-generation model, bringing improved “efficiency, coherency, and quality” and allowing users to create images that can be nearly indistinguishable from real photographs.

Midjourney V5 image

“The latest Midjourney model is both extremely overwhelming/scary and beyond fascinating,” said graphic designer Julie Wieland. “Its ability to recreate intricate details and textures, such as realistic skin texture/facial features and lighting, is unparalleled,” she added.

The photorealistic outputs from this latest version are raising new concerns about deepfakes, particularly with regard to how the technology could be used to portray political candidates in a negative light prior to elections.

It’ll certainly be interesting to see what happens between now and the 2024 US presidential election…

And now your moment of zen

That’s all for today folks!

If you’re enjoying neonpulse, we would really appreciate it if you would consider sharing our newsletter with a friend by sending them this link:

Looking for past newsletters? You can find them all here.

Working on a cool A.I. project that you would like us to write about? Reply to this email with details. We’d love to hear from you!